
Cardiologists train a large AI model to assess the heart's structure and function

Last reviewed: 02.07.2025

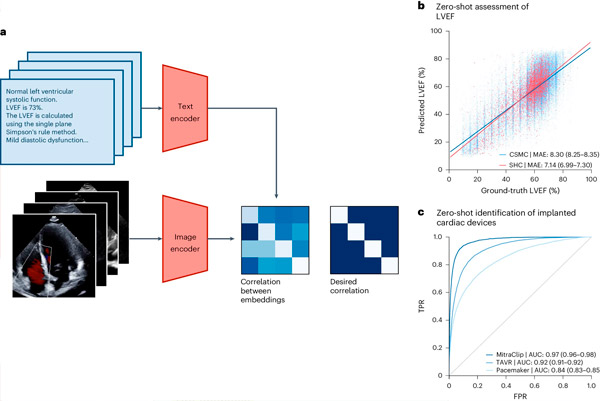

Artificial intelligence experts at Cedars-Sinai and the Smidt Heart Institute have built a dataset of more than 1 million echocardiograms (ultrasound videos of the heart) and their corresponding clinical interpretations. Using this dataset, they developed EchoCLIP, a machine learning algorithm that can "interpret" echocardiogram images and assess key measurements.

The design and evaluation of EchoCLIP, described in a paper published in Nature Medicine, suggest that interpreting a patient's echocardiogram with EchoCLIP yields specialist-level clinical assessments, including assessment of heart function, the results of previous surgeries, and implanted devices, and may help clinicians identify patients who need treatment.

The EchoCLIP foundation model can also identify the same patient across multiple videos, studies, and time points, and recognize clinically important changes in the patient's heart.

"To our knowledge, this is the largest model trained on echocardiography images," said the study's lead author, David Ouyang, MD, a faculty member in the Department of Cardiology at the Smidt Heart Institute and in the Division of Artificial Intelligence in Medicine.

"Many previous AI models for echocardiograms were trained on only tens of thousands of examples. In contrast, EchoCLIP's uniquely strong performance in image interpretation is the result of training on almost ten times more data than existing models."

"Our results show that large datasets of medical imaging and expert-verified interpretations can serve as the basis for training medical foundation models, which are a form of generative artificial intelligence," Ouyang added.

EchoCLIP workflow. Source: Nature Medicine (2024). DOI: 10.1038/s41591-024-02959-y

He noted that this advanced foundation model could soon help cardiologists evaluate echocardiograms by generating estimates of cardiac measurements and by identifying changes over time and common diseases.

To develop EchoCLIP, the research team built a dataset of 1,032,975 cardiac ultrasound videos and their corresponding expert interpretations. Key findings from the study include:

- EchoCLIP performed strongly in assessing heart function from cardiac images.

- The foundation model was able to identify implanted intracardiac devices such as pacemakers, mitral valve implants, and aortic valve implants from echocardiogram images.

- EchoCLIP accurately identified unique patients across studies, detected clinically important changes such as previous heart surgery, and enabled the generation of preliminary text interpretations of echocardiogram images.

"Foundation models are one of the newest areas within generative AI, but most models do not have enough medical data to be useful in healthcare," said Christine M. Albert, MD, MPH, chair of the Department of Cardiology at the Smidt Heart Institute.

Albert, who was not involved in the study, added: "This new foundation model integrates computer vision for interpreting echocardiogram images with natural language processing to enhance cardiologists' interpretations."